Our team is collaborating with the University Hospital of Strasbourg, IHU Strasbourg, IRCAD and other partners to build datasets for various medical recognition tasks. Recommendations on how to combine our datasets and handle potential overlaps are available on this page.

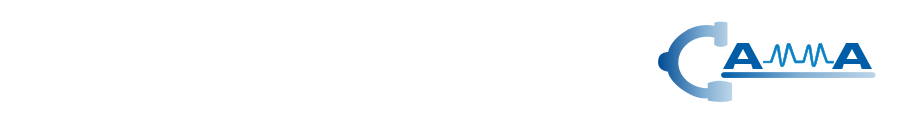

CholecTrack20 Dataset

CholecTrack20 is a dataset of 20 laparoscopic cholecystectomy videos, richly annotated for multi-class multi-tool tracking. It provides multi-perspective trajectories for tool visibility, intracorporeal movement, and life-long intraoperative paths. The dataset also includes annotations for surgical phases, scene visual challenges, and surgeon operators, making it a valuable resource for surgical vision research. More information is provided here as well as in the publication below:

- C.I. Nwoye, K. Elgohary, A. Srinivas, F. Zaid, J.L. Lavanchy, N. Padoy, CholecTrack20: A Multi-Perspective Tracking Dataset for Surgical Tools, Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), arXiv preprint, dataset, 2025

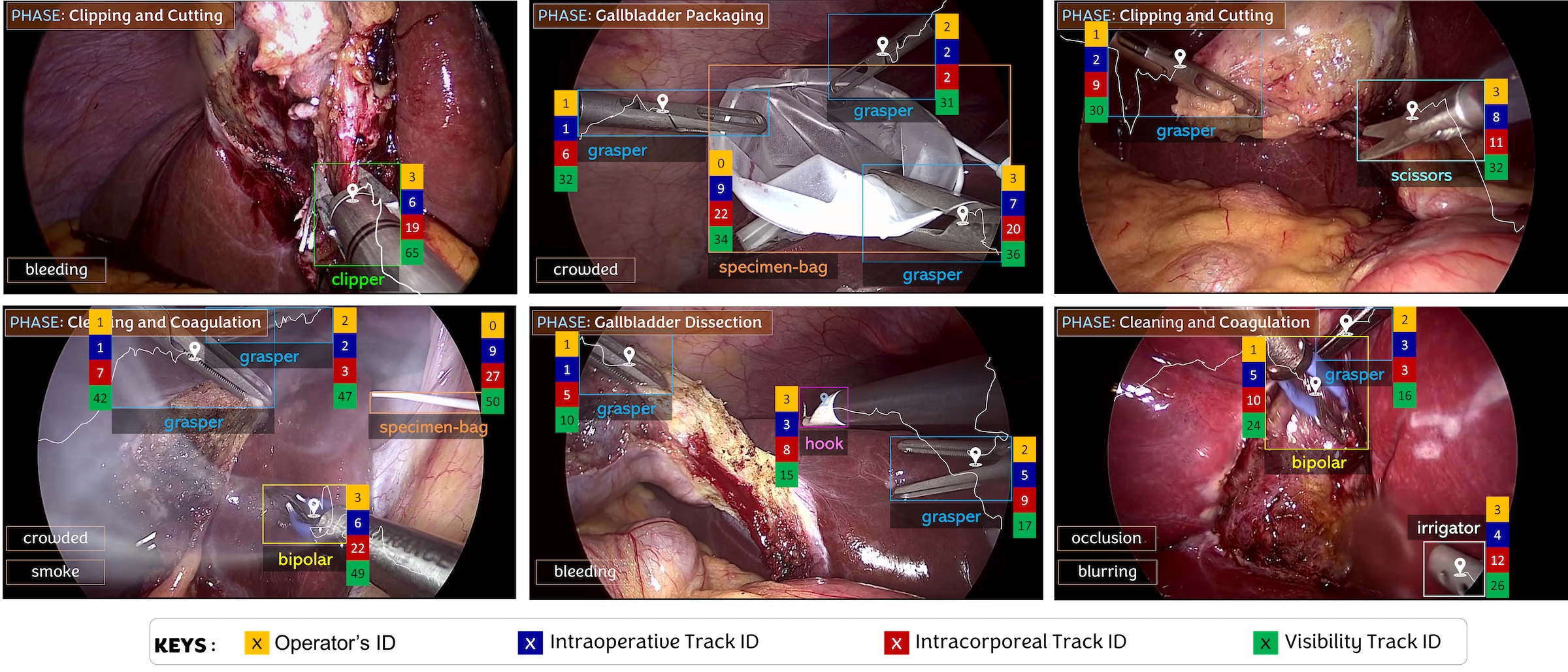

Endoscapes Dataset

Endoscapes is a dataset of 201 laparoscopic videos richly annotated for scene segmentation, object detection, and critical view of safety assessment. More information is provided here as well as in the publication below:

- A. Murali, D. Alapatt, P. Mascagni, A. Vardazaryan, A. Garcia, N. Okamoto, G. Costamagna, D. Mutter, J. Marescaux, B. Dallemagne, N. Padoy, The Endoscapes Dataset for Surgical Scene Segmentation, Object Detection, and Critical View of Safety Assessment: Official Splits and Benchmark, arXiv preprint, dataset, 2023

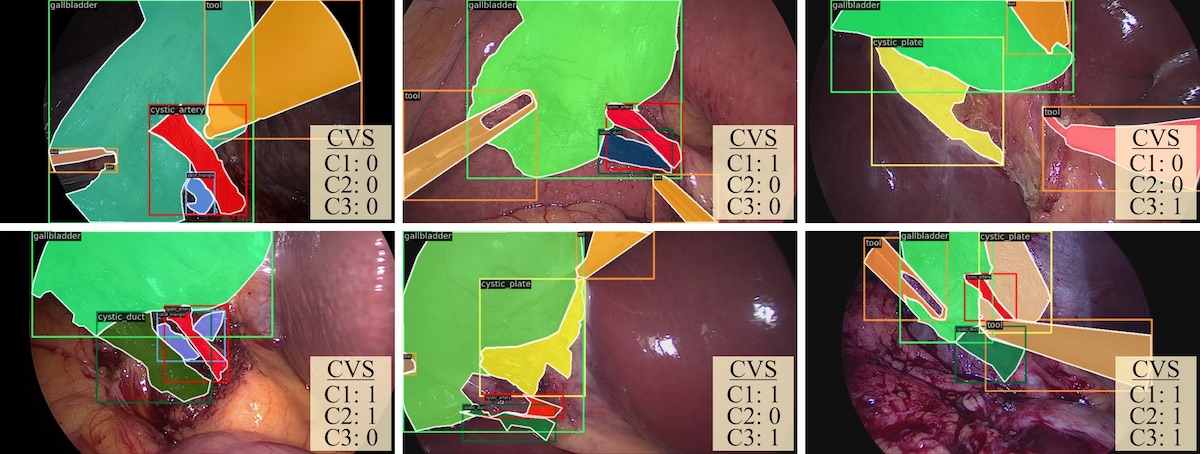

SSG-VQA Dataset

SSG-VQA is a Visual Question Answering dataset on laparoscopic videos providing diverse, geometrically grounded, unbiased and surgical action-oriented queries generated using surgical scene graphs. More information is provided here and in the publication below:

- K. Yuan, M. Kattel, J. Lavanchy, N. Navab, V. Srivastav, N. Padoy, Advancing Surgical VQA with Scene Graph Knowledge, IPCAI, arXiv preprint, dataset, 2023

MultiBypass140 Dataset

MultiBypass140 is a multicentric dataset of endoscopic videos of laparoscopic Roux-en-Y gastric bypass surgery for research on multi-level surgical activity recognition, specifically phases, steps, and intraoperative adverse events (IAEs). More information is provided here and in the publications below:

- J. Lavanchy, S. Ramesh, D. Dall’Alba, C. Gonzalez, P. Fiorini, B. Muller-Stich, P. C. Nett, J. Marescaux, D. Mutter, N. Padoy, Challenges in Multi-centric Generalization: Phase and Step Recognition in Roux-en-Y Gastric Bypass Surgery, arXiv preprint, dataset, 2023

- R. Bose, C. Nwoye, J. Lazo, J. Lavanchy, N. Padoy, Feature Mixing Approach for Detecting Intraoperative Adverse Events in laparoscopic Roux-en-Y gastric bypass surgery, Proceedings of Medical Image Computing and Computer-Assisted Intervention (MICCAI), arXiv preprint, 2025

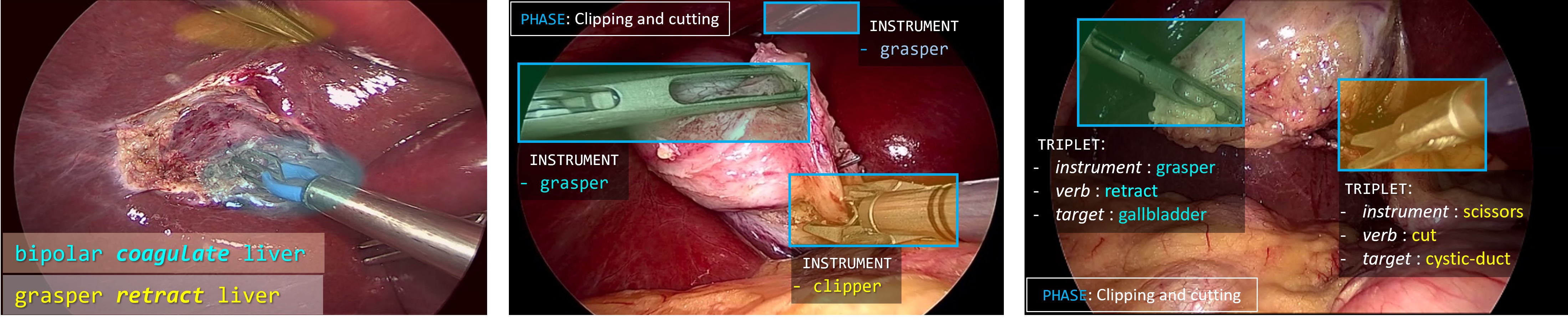

CholecT50 Dataset

CholecT50 is a dataset of 50 endoscopic videos of laparoscopic cholecystectomy surgery introduced to foster research on fine-grained activity recognition using surgical action triplets. It is annotated (at 1 fps) with 100 triplet categories in the format <instrument, verb, target>. Phase labels are also provided, and bounding box labels are provided for a subset of 5 videos. CholecT45, a 45-video subset of CholecT50, was the first official release of the dataset.
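As a rough illustration of the triplet annotation format described above, the sketch below models one triplet and encodes the triplets active in a single frame as a 100-dimensional 0/1 vector. The `Triplet` class, the `multi_hot` helper, the example class IDs and the instrument/verb/target names are all hypothetical, and the sketch assumes that a frame may carry several triplets at once; the actual class mapping and file layout are those documented with the dataset.

```python
from dataclasses import dataclass

NUM_TRIPLET_CLASSES = 100  # number of triplet categories stated in the description


@dataclass(frozen=True)
class Triplet:
    """One <instrument, verb, target> annotation (field values are illustrative)."""
    instrument: str
    verb: str
    target: str


def multi_hot(active_ids, num_classes=NUM_TRIPLET_CLASSES):
    """Encode the triplet class IDs active in one frame as a 0/1 vector."""
    vector = [0] * num_classes
    for class_id in active_ids:
        if not 0 <= class_id < num_classes:
            raise ValueError(f"triplet id {class_id} outside [0, {num_classes})")
        vector[class_id] = 1
    return vector


# Hypothetical ID-to-triplet mapping; the real mapping ships with the dataset.
example_classes = {
    0: Triplet("grasper", "retract", "gallbladder"),
    1: Triplet("hook", "dissect", "gallbladder"),
}

# A frame in which both hypothetical triplets occur simultaneously.
frame_label = multi_hot([0, 1])
```

A vector of this kind is a common target for multi-label classification, which is one way (not necessarily the authors') to frame triplet recognition.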

For more information and to download the CholecT50 dataset, please kindly check our GitHub repository (the previous version, CholecT45, is available here).

The dataset is associated with the publication [Nwoye:MedIA2022]. If you use this data, you are kindly requested to cite the work that led to the generation of the dataset:

- C.I. Nwoye, T. Yu, C. Gonzalez, B. Seeliger, P. Mascagni, D. Mutter, J. Marescaux, N. Padoy, Rendezvous: Attention Mechanisms for the Recognition of Surgical Action Triplets in Endoscopic Videos, Elsevier Medical Image Analysis, arXiv preprint, 2022.

The official data splits for research and development of deep learning models on the CholecT50/CholecT45 datasets are described in:

- C.I. Nwoye, N. Padoy, Data Splits and Metrics for Benchmarking Methods on Surgical Action Triplet Datasets, arXiv preprint, 2022.

The data was used in the CholecTriplet2021 and CholecTriplet2022 challenges. The detailed results of the challenges can be found in these first and second publications.

The relationship between CholecT50 and Cholec80 is described here and here.

CholecT45 was released publicly on April 12, 2022. CholecT50 was released publicly on February 20, 2023.

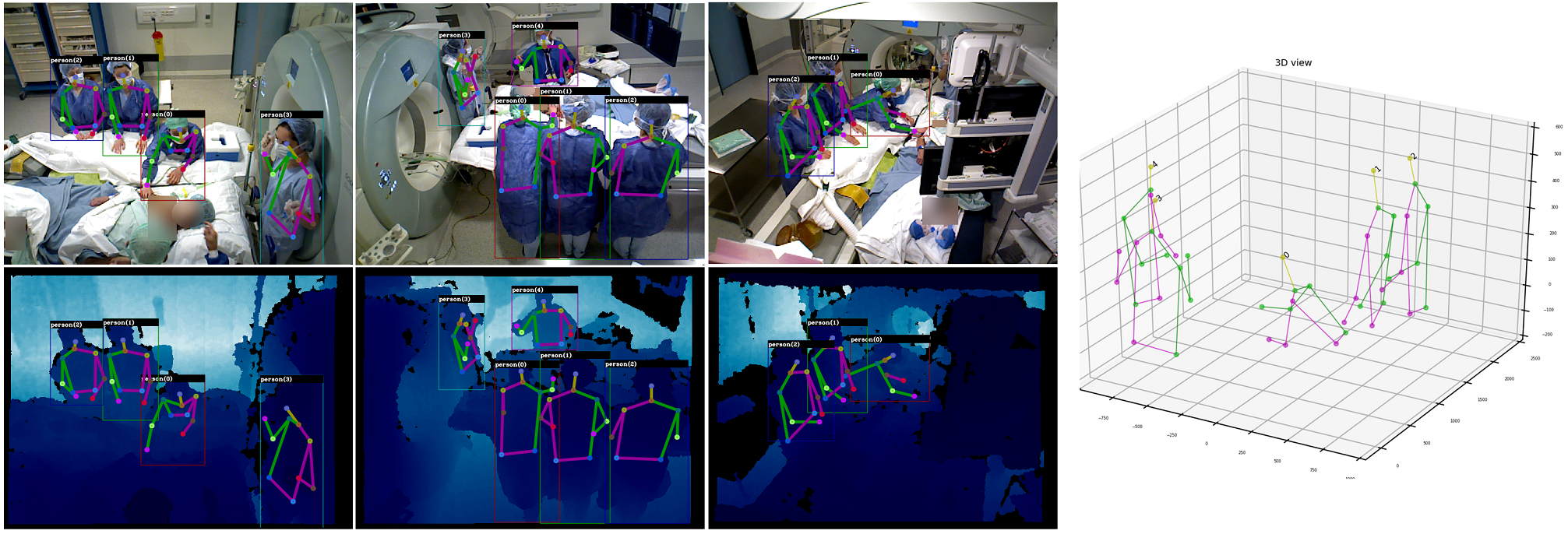

MVOR Dataset

To foster the development of human pose estimation methods and their applications in the Operating Room (OR), we release the Multi-View Operating Room (MVOR) dataset, the first public dataset recorded during real clinical interventions. It consists of synchronized multi-view frames recorded by three RGB-D cameras in a hybrid OR. It also includes the visual challenges present in such environments, such as occlusions and clutter. We provide camera calibration parameters, color and depth frames, human bounding boxes, and 2D/3D pose annotations.

For more information and to download the MVOR images, the annotation files, and the visualization/evaluation code, please kindly check our GitHub repository. The MVOR dataset is associated with the publication [Srivastav:LABEL2018]. If you use this data, you are kindly requested to cite the work that led to the generation of the dataset:

- V. Srivastav, T. Issenhuth, A. Kadkhodamohammadi, M. de Mathelin, A. Gangi, N. Padoy, MVOR: A Multi-view RGB-D Operating Room Dataset for 2D and 3D Human Pose Estimation, MICCAI-LABELS, arXiv preprint, 2018

Cholec80 Dataset

The Cholec80 dataset contains 80 videos of cholecystectomy surgeries performed by 13 surgeons. The videos are captured at 25 fps. The dataset is labeled with phase annotations (at 25 fps) and tool presence annotations (at 1 fps). The phases have been defined by a senior surgeon in our partner hospital. Since the tools are sometimes barely visible in the images and thus difficult to recognize visually, we define a tool as present in an image if at least half of the tool tip is visible.
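Because the phase labels (25 fps) and tool presence labels (1 fps) are given at different rates, a common first step is to bring them onto the same time base. The sketch below keeps one phase label per second by taking every 25th frame. It assumes that the i-th tool annotation corresponds to frame i × 25 of the video; the function name and the placeholder phase names are hypothetical, so check the annotation files for the actual frame indices.

```python
VIDEO_FPS = 25  # phase labels are given for every frame at 25 fps
TOOL_FPS = 1    # tool presence labels are given at 1 fps


def phases_at_tool_rate(phase_per_frame, video_fps=VIDEO_FPS, tool_fps=TOOL_FPS):
    """Subsample per-frame phase labels to the tool annotation rate.

    Assumes tool annotation i refers to frame i * (video_fps // tool_fps).
    """
    if video_fps % tool_fps != 0:
        raise ValueError("video_fps must be an integer multiple of tool_fps")
    step = video_fps // tool_fps
    return phase_per_frame[::step]


# Three seconds of video: two seconds of one phase, then one second of another.
# "phase_a" and "phase_b" are placeholder names, not the dataset's phase labels.
per_frame = ["phase_a"] * 50 + ["phase_b"] * 25
per_second = phases_at_tool_rate(per_frame)
# per_second == ["phase_a", "phase_a", "phase_b"]
```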

This dataset has been released under the CC BY-NC-SA 4.0 license. If you wish to have access to this dataset, please kindly fill in the request form.

This dataset is associated with the publication [Twinanda:TMI2016]. If you use this data, you are kindly requested to cite the work that led to the generation of this dataset:

- A.P. Twinanda, S. Shehata, D. Mutter, J. Marescaux, M. de Mathelin, N. Padoy, EndoNet: A Deep Architecture for Recognition Tasks on Laparoscopic Videos, IEEE Transactions on Medical Imaging (TMI), arXiv preprint, 2017

M2CAI 2016 Challenge Datasets

These datasets were generated for the M2CAI challenges, a satellite event of MICCAI 2016 in Athens. Two datasets are available for two different challenges: m2cai16-workflow for the surgical workflow challenge and m2cai16-tool for the surgical tool detection challenge. Some of the videos are taken from the Cholec80 dataset. We invite the reader to go to the M2CAI challenge page for more details regarding the dataset and the results of the past challenges. In order to maintain the ranking of the methods evaluated on these datasets, please kindly send us your quantitative results along with the corresponding technical report to: m2cai2016@gmail.com.

m2cai16-workflow dataset. This dataset is the result of collaborations with the University Hospital of Strasbourg and with the Hospital Klinikum Rechts der Isar in Munich. It contains 41 laparoscopic videos of cholecystectomy procedures. To gain access to the dataset, please kindly fill in the following form: m2cai16-workflow request. To see the results of various methods, please visit the following web page: m2cai16-workflow results. If you use this dataset, you are kindly requested to cite both of the following publications that led to the generation of the dataset:

- A.P. Twinanda, S. Shehata, D. Mutter, J. Marescaux, M. de Mathelin, N. Padoy, EndoNet: A Deep Architecture for Recognition Tasks on Laparoscopic Videos, IEEE Transactions on Medical Imaging (TMI), arXiv preprint, 2017

- R. Stauder, D. Ostler, M. Kranzfelder, S. Koller, H. Feußner, N. Navab. The TUM LapChole dataset for the M2CAI 2016 workflow challenge. CoRR, vol. abs/1610.09278, 2016.

m2cai16-tool dataset. This dataset was generated through a collaboration with the University Hospital of Strasbourg. It contains 15 laparoscopic videos of cholecystectomy procedures. To gain access to the dataset, please kindly fill in the following form: m2cai16-tool request. To see the results of various methods, please visit the following web page: m2cai16-tool results. If you use this dataset, you are kindly requested to cite the work that led to the generation of the dataset:

- A.P. Twinanda, S. Shehata, D. Mutter, J. Marescaux, M. de Mathelin, N. Padoy, EndoNet: A Deep Architecture for Recognition Tasks on Laparoscopic Videos, IEEE Transactions on Medical Imaging (TMI), arXiv preprint, 2017

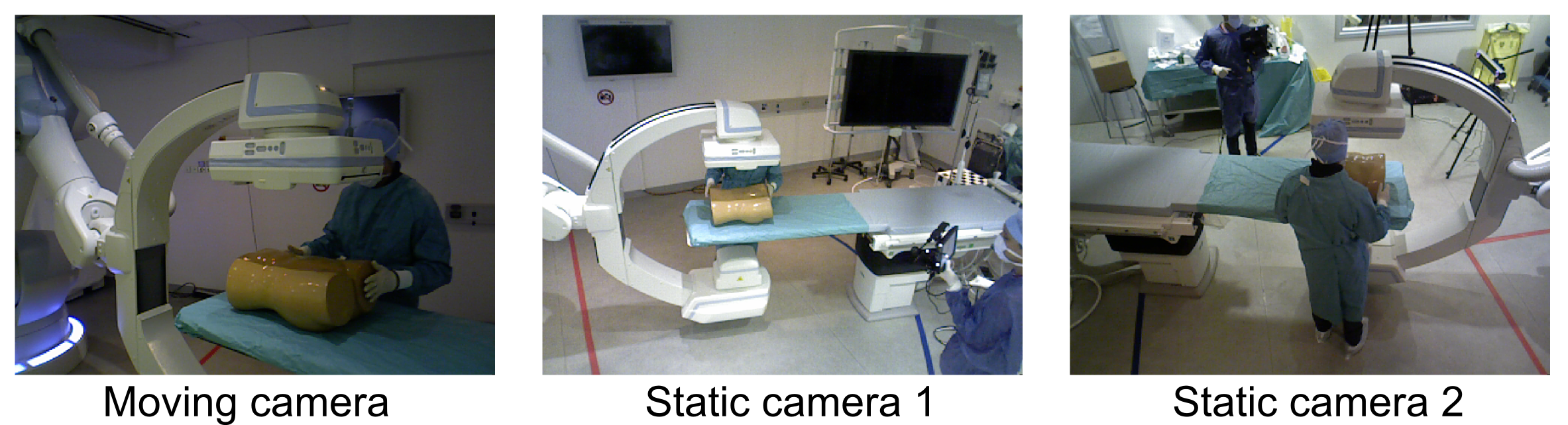

xawAR16 Dataset

This is a multi-RGBD camera dataset, generated inside an operating room (IHU Strasbourg), which was designed to evaluate tracking/relocalization of a hand-held moving camera. Three RGBD cameras (Asus Xtion Pro Live) were used to record the dataset. Two of them are rigidly mounted to the ceiling in a configuration allowing them to capture views from each side of the operating table. The third is fixed to a display, which is held by a user who moves around the room. A reflective passive marker is attached to the moving camera, and its ground-truth pose is obtained with a real-time optical 3D measurement system (infiniTrack system from Atracsys).

The dataset is composed of 16 sequences of time-synchronized color and depth images at full sensor resolution (640×480) recorded at 25 fps, along with the ground-truth poses of the moving camera measured by the tracking device at 30 Hz. Each sequence shows a different scene configuration and camera motion, including occlusions, motion in the scene and abrupt viewpoint changes.
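Since the images are recorded at 25 fps while the ground-truth poses arrive at 30 Hz, evaluating a tracker typically requires pairing each frame with a pose. The sketch below shows one simple option, nearest-timestamp matching. It assumes that frame and pose timestamps are available in seconds on a shared clock and that the pose timestamps are sorted; the function name and the synthetic timestamps are hypothetical and do not reflect the dataset's actual file format.

```python
from bisect import bisect_left


def nearest_pose_indices(frame_times, pose_times):
    """For each frame timestamp, return the index of the closest pose timestamp.

    pose_times must be sorted in ascending order and non-empty.
    """
    if not pose_times:
        raise ValueError("pose_times must not be empty")
    indices = []
    for t in frame_times:
        right = bisect_left(pose_times, t)  # first pose at or after t
        if right == 0:
            indices.append(0)
        elif right == len(pose_times):
            indices.append(len(pose_times) - 1)
        else:
            left = right - 1
            # Pick the closer neighbour; ties go to the earlier pose.
            closer_left = t - pose_times[left] <= pose_times[right] - t
            indices.append(left if closer_left else right)
    return indices


# Synthetic timestamps: five frames at 25 fps and six poses at 30 Hz.
frame_times = [i / 25 for i in range(5)]
pose_times = [i / 30 for i in range(6)]
matches = nearest_pose_indices(frame_times, pose_times)
# matches == [0, 1, 2, 4, 5]
```

Nearest-neighbour matching leaves a residual offset of up to half a pose interval (about 17 ms at 30 Hz); interpolating between the two neighbouring poses is an alternative when that offset matters.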

More information and instructions on how to gain access to this dataset can be found here.