PhD and Post-doctoral Positions

We are currently looking for several PhD students and post-doctoral fellows in Computer Vision/Artificial Intelligence:

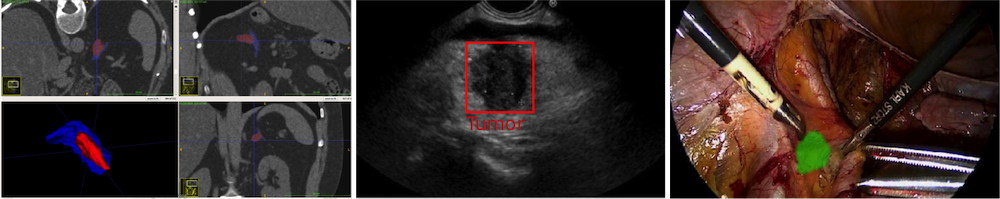

(1) PhD & Postdoc positions: Multi-modality Learning from Video & Text for Large-Scale Surgical Video Analysis

(2) PhD position: Holistic Surgical Scene Analysis from Multi-modal Operating Room Data

(3) Postdoc position: Quantitative Medical Imaging Biomarkers

Please feel free to contact Nicolas Padoy if you are interested in another research topic in AI for healthcare.

Research Fellowships for Clinicians

We have positions for clinicians interested in joining our team and working closely with our researchers on clinical AI projects for 12 to 24 months. If interested, please send Nicolas Padoy an email with a letter of motivation, your curriculum vitae and a list of publications.

Research Engineer Positions

We have open positions for research engineers with a background in medical image analysis, computer-aided surgery, surgical data science or federated learning who are interested in developing and supporting our prototypes. More information is available here.

Engineering Position in APEUS Project

We have a position open in the APEUS project for endoscopic ultrasound video analysis. More information is available here.

Internships

We are offering several internship positions / Master’s theses for motivated students interested in contributing to the development of our computer vision system for the operating room. Proficiency in programming and English, along with knowledge of computer vision or machine learning, is expected. If you are interested in doing an internship in an area other than those listed below, please send Nicolas Padoy a short email including a description of your interests, your curriculum vitae and your academic transcripts. All positions are funded and last 5 to 6 months. Successful candidates will be hosted within the IHU institute at the University Hospital of Strasbourg, where they will have direct contact with clinicians and access to an exceptional research environment.

To apply: please send an email to the listed contact describing your interests and motivation along with your curriculum vitae and academic transcripts.

Topics:

| Representation Learning from Multi-modal Data (Vision and Language) | |

| Propose methods to learn effective representations from open surgical knowledge such as video lectures and textbooks. Apply these representations to solve recognition tasks on endoscopic videos with minimal supervision and develop foundation models for surgery. Combine these methods with differentiable semantic models of surgery, such as scene graph representations. (contact) |

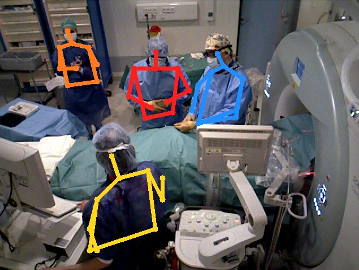

| Deep Learning for Activity Recognition in Large Surgical Video Databases | |

| Develop an approach to model, detect and recognize the surgical activities occurring within the hundreds of videos from our constantly growing surgical database. The approach will focus either on endoscopic videos or on multiple external RGBD views capturing the clinicians' activities. It will also be integrated into our live demonstrator for the operating room. (contact) |

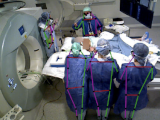

| Multi-view Human Body Tracking / Activity Analysis for the Operating Room | |

| Design a robust method to estimate the 3D body poses of clinicians or recognize their activities using a multi-RGBD camera system mounted directly in the operating room of our clinical partner. The approach will have to address several issues related to clutter, occlusions and temporal tracking. (contact) |

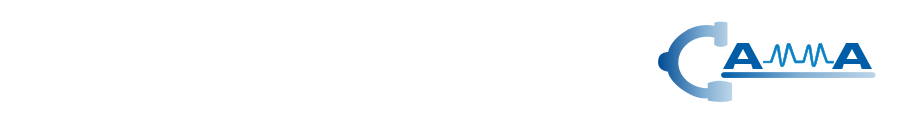

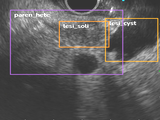

| Anatomy Detection Algorithms from Endoscopic Ultrasound | |

| Propose new algorithms for the analysis of Endoscopic Ultrasound (EUS) videos of pancreatic anatomy. The project requires developing computer vision and machine learning methods for this task. Viable solutions are likely to transfer to clinical practice and actively assist clinicians with ultrasound-based anatomy recognition. (contact) |

| 3D Visualization of X-ray Propagation for Radiation Safety Analysis | |

| Propose a method for the intuitive visualization of the 3D radiation risk in the interventional radiology room using the Microsoft HoloLens head-mounted display. The method will rely on our ceiling-mounted multi-RGBD camera system and be implemented on GPU to enable quick interactions between the clinicians and the monitoring system. (contact) |

| Computer Vision for Histopathological Diagnosis | |

| Pathology is the gold standard for understanding biological phenomena at the microscopic level through the examination of body tissues. The project involves developing algorithms that analyze cell samples to identify structural properties of the tissue. To that end, we use image segmentation algorithms to identify cells and graph-based algorithms to analyze their geometric structure. (contact) |

| Raspberry Pi-based Multi-view Camera System for Real-time Deep Learning Activity Analysis in the Operating Room | |

| Development of an electronic board system based on Raspberry Pi allowing the acquisition of synchronised video data streams from RGB-D (Intel RealSense) cameras. This system will make it possible to obtain the 3D spatial positions of the various elements of an operating room scene such as the clinical staff and the surgical systems (respirator, endoscopy columns, ...) using state-of-the-art neural network detection algorithms. (contact) |

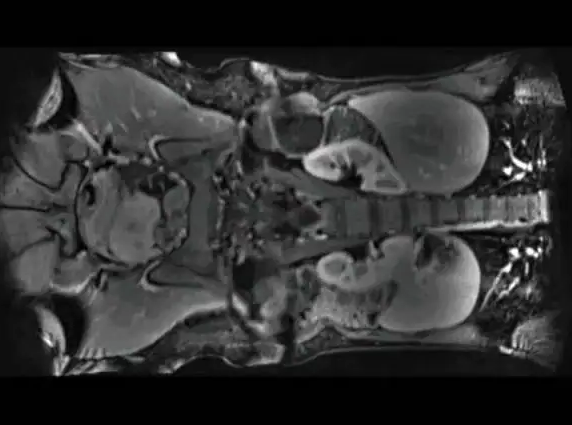

| Machine Learning for Medical Image Analysis | |

| Today’s operating room (OR) has become a complex setting of machines, surgeons, nurses and patients. Large amounts of data are generated in a single operation alone. These data are temporal and multimodal (e.g., endoscopy videos and radiological, physiological and human movement data), providing a rich context of the operation. In this project, the intern will research self-supervision, weak supervision and multimodal fusion methods to segment, classify or analyze medical images such as CT and MRI scans. (contact) |

| Development of Intelligent Annotation Functions in a Web-based Collaborative Tool and Application to Deep Learning Video Analysis | |

| Producing high-quality medical databases requires annotating elements such as videos or images, an essential but extremely time-consuming task. We are developing a web-based collaborative solution (MOSaiC) that allows surgeons and endoscopists to participate efficiently in this phase, which is crucial for artificial intelligence systems. Developing functions that further improve this efficiency (semi-automated segmentation, intelligent annotation tools, etc.) will reduce annotation time while ensuring the necessary quality. (contact) |

| Federated Learning Methods for Medical Images and Videos | |

| Federated Learning (FL) is a recently proposed technique that addresses privacy and data-ownership concerns in machine learning by training models across multiple sites without centralizing their data. Our lab is developing infrastructure for rich temporal multi-modal data in the operating room, along with methods to improve the efficiency of FL algorithms. The intern will have the opportunity to work with a group of scientists and clinicians and to research novel algorithms for privacy-preserving approaches, noisy data and self-supervision in FL settings. (contact) |

More details and other topics are available upon request. Illustrative videos related to several projects are available here.