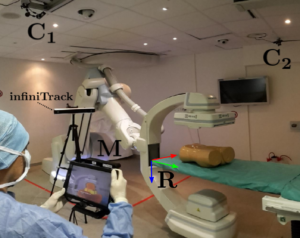

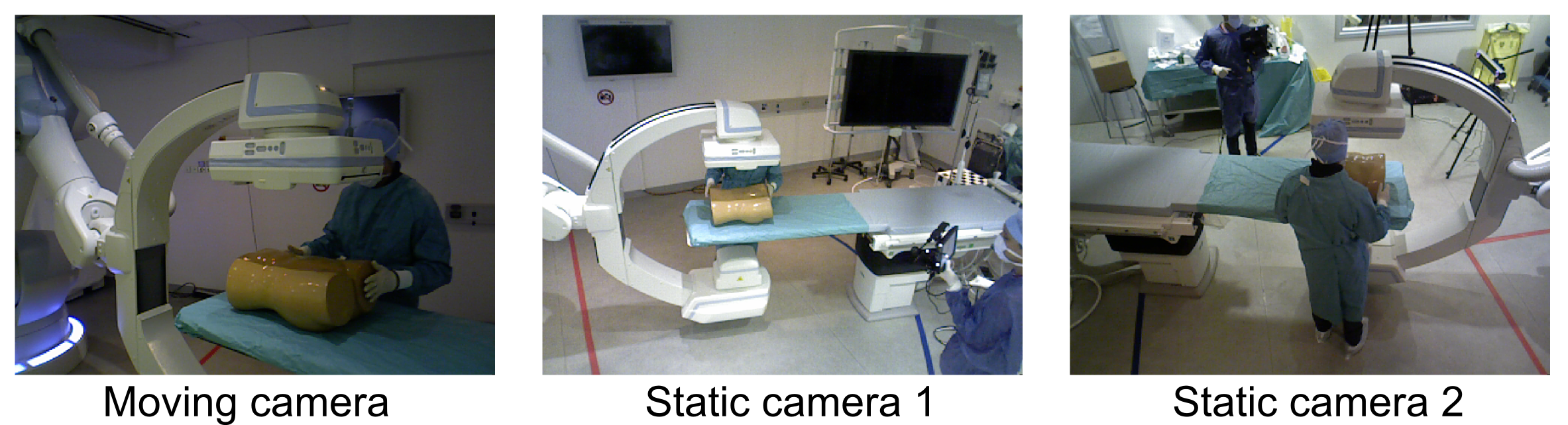

xawAR16: A multi-RGBD camera dataset for camera relocalization evaluation in the operating room

We provide a large multi-RGBD camera dataset recorded inside an operating room (IHU Strasbourg, France) containing a robotized X-ray imaging device, an operating table and other medical equipment in the background. The dataset is designed to evaluate the tracking/relocalization of a hand-held camera, which is moved freely on both sides of the operating table under different scene configurations. All necessary calibration matrices are provided, along with sample scripts showing how to use the data. Three RGBD cameras (Asus Xtion Pro Live) were used to record this dataset. Two of them are rigidly mounted to the ceiling in a configuration that allows them to capture views from each side of the operating table. The third is fixed to a display, which is held by a user who moves around the room. A reflective passive marker is attached to the moving camera, and its ground-truth pose is obtained with a real-time optical 3D measurement system (infiniTrack system from Atracsys). The setup is shown in the figure below:

The dataset is composed of 16 sequences of time-synchronized color and depth images at full sensor resolution (640×480) recorded at 25 fps, along with the ground-truth poses of the moving camera measured by the tracking device at 30 Hz. Each sequence shows a different scene configuration and camera motion, including occlusions, motion in the scene and abrupt viewpoint changes.
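Because the images are recorded at 25 fps and the ground-truth poses at 30 Hz, an image generally has to be paired with the pose closest to it in time. The following is a minimal sketch of such a nearest-timestamp association. It assumes that timestamps are available in seconds as sorted lists, which is not something this page specifies; the function name `nearest_pose_indices` and the `max_gap` threshold are hypothetical.

```python
from bisect import bisect_left


def nearest_pose_indices(image_times, pose_times, max_gap=0.02):
    """For each image timestamp, return the index of the closest pose
    timestamp, or None when the closest pose is further away than
    max_gap seconds. pose_times must be sorted in ascending order."""
    matches = []
    for t in image_times:
        i = bisect_left(pose_times, t)
        # Only the pose just before and the pose just after t can be closest.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(pose_times)]
        if not candidates:
            matches.append(None)
            continue
        best = min(candidates, key=lambda j: abs(pose_times[j] - t))
        matches.append(best if abs(pose_times[best] - t) <= max_gap else None)
    return matches


# Hypothetical timestamps: images at 25 fps, poses at 30 Hz.
image_times = [k / 25.0 for k in range(5)]
pose_times = [k / 30.0 for k in range(7)]
print(nearest_pose_indices(image_times, pose_times))
```

Rejecting matches beyond `max_gap` avoids pairing an image with a pose recorded during a tracking dropout; the threshold value here is only illustrative.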

Licence and download link

If you find this data interesting and are planning to use it for your work, you are kindly requested to cite the work that led to the generation of this dataset:

N. Loy Rodas, F. Barrera, N. Padoy, See It With Your Own Eyes: Marker-less Mobile Augmented Reality for Radiation Awareness in the Hybrid Room, IEEE Transactions on Biomedical Engineering (TBME), Volume: 64, Issue: 2, Pages: 429 – 440, Feb. 2017 (online version), doi:10.1109/TBME.2016.2560761, 2016

The xawAR16 dataset is publicly released under the Creative Commons licence CC-BY-NC-SA 4.0. This implies that:

– the dataset cannot be used for commercial purposes,

– the dataset can be transformed, on the condition that any changes are indicated and that your contributions are distributed under the same licence as the original,

– the dataset can be redistributed as long as it is redistributed under the same licence, with the obligation to cite the contributing work which led to the generation of the xawAR16 dataset (mentioned above).

By downloading and using this dataset, you agree to these terms and conditions.

Download:

This dataset has been released. To receive an email with a link to download the dataset, please fill in the form found here.

For any questions and/or comments on this dataset or the paper please send an email to: nloyrodas@unistra.fr.

Detailed information about the xawAR16 dataset

File formats:

- Color images are stored as 640 x 480 8-bit RGB images in PNG format

- Depth maps are stored as 640 x 480 16-bit grey-scale images in PNG format

- Depth map pixel values are in millimeters: a value of 1000 corresponds to a distance of 1 meter from the camera.

- Synchronization between the color and depth images of each camera is provided per sequence as a .txt file named after the camera’s serial number.

- The images are contained in a folder named after the corresponding camera’s serial number: 1206120016 for the moving camera, and 1205220174 and 1205230137 for the ceiling-mounted (static) cameras 1 and 2, respectively.

- A file to synchronize the images of the 3 cameras is provided per sequence: multiCameraSynchronization.txt. Taking the moving camera (1206120016) as reference, it gives the corresponding image indices for the other two cameras.

- All calibration and pose matrices (extrinsics, moving camera pose and ground-truth registration) are provided as 4 x 4 homogeneous transformation matrices.

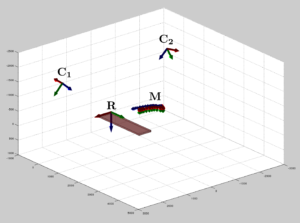

- The ground-truth poses are expressed in the global room reference frame, which is centered at the right corner of the operating table and oriented as shown in the figure.
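As an illustration of the depth encoding described above, the sketch below converts raw 16-bit depth values to meters. It deliberately uses plain Python and leaves out PNG decoding, which requires an image library able to read 16-bit grey-scale files. Treating a raw value of 0 as "no measurement" is an assumption that is not stated on this page, and the function names are hypothetical.

```python
def depth_value_to_meters(raw):
    """Convert one 16-bit depth value (millimeters) to meters.

    Assumption: a raw value of 0 marks a pixel without a valid depth
    measurement, so None is returned for it.
    """
    if not 0 <= raw <= 0xFFFF:
        raise ValueError("expected a 16-bit unsigned depth value")
    return raw / 1000.0 if raw > 0 else None


def depth_row_to_meters(row):
    """Convert one image row of raw depth values to meters."""
    return [depth_value_to_meters(v) for v in row]


# A raw value of 1000 corresponds to 1 meter.
print(depth_row_to_meters([0, 1000, 2345]))
```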

Included sequences

- The dataset is divided depending on which side of the X-ray imaging device the moving camera was held: right or left.

- The sequences include several scenarios, classified as follows:

  - Smooth: the moving camera follows a smooth trajectory including several loop closures and without occlusions in the view (good for debugging purposes).

  - Clinician: smooth sequence with a clinician operating near the patient and therefore occluding the view of the moving camera.

  - Challenging: sequence of type Clinician in which the trajectory of the moving camera is interrupted by large motions and abrupt viewpoint changes.

  - Device rotation: the robotized X-ray imaging device rotates during the sequence (full or partial rotation).

- Each type of sequence is repeated for different configurations of the X-ray imaging device. The standardized naming convention of interventional radiology is used to refer to the device’s configuration in each sequence: PA (posterior-anterior), AP (anterior-posterior) and RAO (right anterior oblique at 45° or 135°).

| Sequence ID | OR side | Description | Name | Length (s) | Download link |
|---|---|---|---|---|---|
| 0 | Right | Smooth | R_PA_Smooth | 91 | |
| 1 | Right | Smooth | R_AP_Smooth | 41 | |
| 2 | Right | Smooth | R_RAO135_Smooth | 57 | |
| 3 | Right | Clinician | R_PA_Clinician | 125 | |
| 4 | Right | Clinician | R_AP_Clinician | 27 | |
| 5 | Right | Device rotation | R_CT_Challenging | 64 | |
| 6 | Right | Challenging | R_PA_Challenging | 96 | |
| 7 | Right | Challenging | R_RAO135_Challenging | 107 | |
| 8 | Left | Smooth | L_PA_Smooth | 47 | |
| 9 | Left | Smooth | L_RAO45_Smooth | 53 | |
| 10 | Left | Smooth | L_RAO135_Smooth | 60 | |
| 11 | Left | Clinician | L_AP_Clinician | 65 | |
| 12 | Left | Clinician | L_CT_Clinician | 46 | |
| 13 | Left | Device rotation | L_CT_Smooth | 91 | |
| 14 | Left | Challenging | L_PA_Challenging | 83 | |
| 15 | Left | Challenging | L_RAO45_Challenging | 57 | |
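The sequence names in the table follow a side_configuration_scenario pattern, so they can be split programmatically, for instance to select all sequences recorded on one side. The sketch below is one possible way to do this; `SequenceName` and `parse_sequence_name` are hypothetical names, not part of the dataset's scripts. The last token is taken from the name as-is and may differ from the Description column (for example, sequences 5 and 13).

```python
from typing import NamedTuple


class SequenceName(NamedTuple):
    side: str           # "Right" or "Left"
    configuration: str  # device configuration token, e.g. "PA", "AP", "RAO135"
    scenario: str       # last token of the name, e.g. "Smooth"


SIDES = {"R": "Right", "L": "Left"}


def parse_sequence_name(name):
    """Split a sequence name such as 'R_RAO135_Challenging' into its parts."""
    parts = name.split("_")
    if len(parts) != 3:
        raise ValueError(f"expected three '_'-separated tokens in {name!r}")
    side_code, configuration, scenario = parts
    if side_code not in SIDES:
        raise ValueError(f"unknown side prefix in {name!r}")
    return SequenceName(SIDES[side_code], configuration, scenario)


print(parse_sequence_name("R_RAO135_Challenging"))
```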

- Extrinsics:

  - Allow the ceiling-mounted cameras’ images to be registered to the global room reference frame.

  - Named after the camera’s serial number, as camera-serialNum.txt (1205220174.txt or 1205230137.txt).

- infiniTracker:

  - Transformations necessary to register the ground-truth poses to the room reference frame.

  - Divided according to the side of the operating table where the optical tracking system is installed (right or left side depending on the sequence).

  - The following transformations are provided:

    - fiducial_to_Cam.txt: rigid transformation from the fiducial’s reference frame to the RGB camera attached to the handheld screen.

    - ir_left_infiniTrack-room.txt: rigid transformation from the fiducial’s reference frame to the infrared stereo camera system of the infiniTrack.

    - Refinement.txt (optional): small transformation computed to correct a small deviation due to calibration errors.
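Since all of these files hold 4 x 4 homogeneous matrices, registering a pose comes down to reading, inverting and multiplying rigid transformations. The sketch below shows these three operations in plain Python. It assumes that each file contains 16 whitespace-separated numbers in row-major order, which this page does not specify, and all function names are hypothetical. The order and direction in which the dataset's matrices have to be chained are defined by the provided MATLAB sample scripts and are not reproduced here.

```python
def read_matrix(text):
    """Parse a 4 x 4 matrix from text (assumed layout: 16 whitespace-separated
    numbers in row-major order)."""
    values = [float(tok) for tok in text.split()]
    if len(values) != 16:
        raise ValueError("expected 16 values for a 4 x 4 matrix")
    return [values[r * 4:(r + 1) * 4] for r in range(4)]


def matmul(a, b):
    """Product of two 4 x 4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(t):
    """Invert a rigid transform [R | p] as [R^T | -R^T p]."""
    rt = [[t[c][r] for c in range(3)] for r in range(3)]
    p = [t[r][3] for r in range(3)]
    new_p = [-sum(rt[r][k] * p[k] for k in range(3)) for r in range(3)]
    return [rt[r] + [new_p[r]] for r in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def compose(*transforms):
    """Multiply transforms left to right: compose(A, B, C) = A * B * C."""
    result = [[float(i == j) for j in range(4)] for i in range(4)]
    for t in transforms:
        result = matmul(result, t)
    return result


# Hypothetical matrix: 90 degree rotation about z plus a translation.
t = read_matrix("0 -1 0 1   1 0 0 2   0 0 1 3   0 0 0 1")
print(compose(t, invert_rigid(t)))  # numerically the identity
```

Inverting with the transpose of the rotation block is only valid for rigid transformations, which is what the files are described as containing.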

Sample scripts

A set of MATLAB sample scripts is provided to show how to use the data.

Dataset_sample.m

- Sample illustrating how to apply the different transformations (extrinsics, calibration…) needed to register the ground-truth moving camera poses with the global room reference frame.

- An animation of the displacement of the moving camera as read from the ground-truth poses in a virtual environment is shown (see figure below):

Evaluation_sample.m

- Sample script showing how to evaluate the tracking of the moving camera against the ground-truth poses with the metrics used in our paper: relative pose error (RPE) and absolute pose error (APE).

- Sample results from our algorithm applied on sequence R_PA_Smooth are provided to illustrate the evaluation approach.
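For readers not working in MATLAB, the sketch below illustrates the translational part of the two metrics in plain Python. It follows the commonly used formulations of absolute and relative pose error and is not a port of Evaluation_sample.m; the exact definitions used in the paper may differ. It assumes that estimated and ground-truth poses are 4 x 4 rigid matrices, already associated in time and expressed in the same reference frame. All function names are hypothetical.

```python
import math


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(t):
    """Invert a rigid transform [R | p] as [R^T | -R^T p]."""
    rt = [[t[c][r] for c in range(3)] for r in range(3)]
    p = [t[r][3] for r in range(3)]
    new_p = [-sum(rt[r][k] * p[k] for k in range(3)) for r in range(3)]
    return [rt[r] + [new_p[r]] for r in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def translation_norm(t):
    return math.sqrt(sum(t[r][3] ** 2 for r in range(3)))


def absolute_translation_errors(estimated, ground_truth):
    """Per-frame distance between estimated and ground-truth positions."""
    return [translation_norm(matmul(invert_rigid(g), e))
            for e, g in zip(estimated, ground_truth)]


def relative_translation_errors(estimated, ground_truth, delta=1):
    """Per-frame drift: compare the motion over delta frames in both trajectories."""
    errors = []
    for i in range(len(estimated) - delta):
        rel_gt = matmul(invert_rigid(ground_truth[i]), ground_truth[i + delta])
        rel_est = matmul(invert_rigid(estimated[i]), estimated[i + delta])
        errors.append(translation_norm(matmul(invert_rigid(rel_gt), rel_est)))
    return errors


def rmse(values):
    if not values:
        raise ValueError("no error values to aggregate")
    return math.sqrt(sum(v * v for v in values) / len(values))


def translation(x, y, z):
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


# Toy trajectories: the estimate has a constant 0.1 offset along y.
ground_truth = [translation(float(k), 0.0, 0.0) for k in range(3)]
estimated = [translation(float(k), 0.1, 0.0) for k in range(3)]
ape = rmse(absolute_translation_errors(estimated, ground_truth))
rpe = rmse(relative_translation_errors(estimated, ground_truth))
print(ape, rpe)
```

In this toy case the constant offset shows up in the absolute error but not in the relative one, which is why the two metrics are usually reported together: one captures global consistency, the other local drift.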